Sparse-MDI

Adaptive Sparsification for Distributed Edge Inference

Reducing communication overhead in edge AI through intelligent, network-aware adaptive sparsity.

About the Project

Our research focuses on optimizing communication in distributed edge AI systems through network-aware adaptive sparsification.

What is Sparse-MDI?

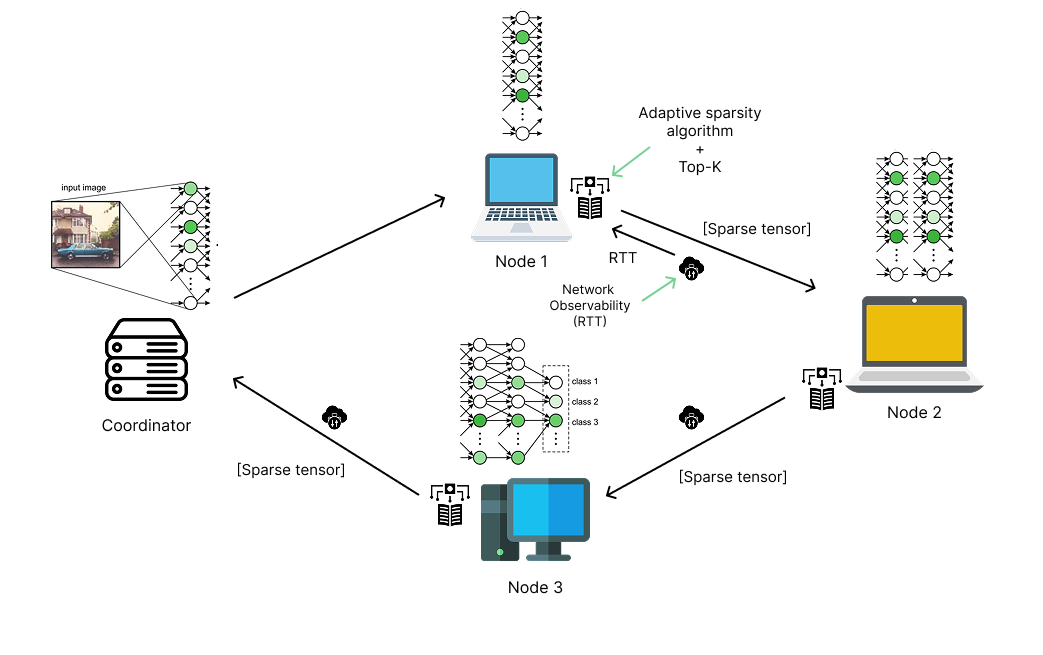

Sparse-MDI (Sparse Model Distributed Inference) is a research project that optimizes communication during inference in edge AI systems. By applying adaptive sparsification, our approach reduces the bandwidth required and the latency incurred in distributed inference scenarios.
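To make the bandwidth argument concrete, here is a minimal sketch of one common sparsification scheme: top-k magnitude pruning of an intermediate activation tensor, sent as (index, value) pairs. The helper names (`sparsify_topk`, `densify`, `payload_bytes`), the 32-bit encoding, and the tensor shape are illustrative assumptions, not the Sparse-MDI implementation.

```python
import numpy as np


def sparsify_topk(tensor: np.ndarray, sparsity: float):
    """Keep the largest-magnitude (1 - sparsity) fraction of entries.

    Hypothetical helper. Returns a COO-style encoding (indices, values, shape).
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    flat = tensor.ravel()
    k = max(1, int(round(flat.size * (1.0 - sparsity))))
    # argpartition selects the k largest magnitudes without a full sort
    idx = np.argpartition(np.abs(flat), -k)[-k:]
    idx = np.sort(idx).astype(np.int32)
    return idx, flat[idx].astype(np.float32), tensor.shape


def densify(indices: np.ndarray, values: np.ndarray, shape) -> np.ndarray:
    """Rebuild a dense tensor on the receiving node; dropped entries become 0."""
    out = np.zeros(int(np.prod(shape)), dtype=np.float32)
    out[indices] = values
    return out.reshape(shape)


def payload_bytes(indices: np.ndarray, values: np.ndarray) -> int:
    """Bytes on the wire for the sparse encoding (indices + values only)."""
    return indices.nbytes + values.nbytes


rng = np.random.default_rng(0)
activation = rng.standard_normal((1, 256, 14, 14)).astype(np.float32)
idx, vals, shape = sparsify_topk(activation, sparsity=0.8)
dense_size = activation.nbytes
sparse_size = payload_bytes(idx, vals)
print(f"dense={dense_size} B, sparse={sparse_size} B")  # dense=200704 B, sparse=80280 B
```

With 32-bit indices and 32-bit values, each kept entry costs 8 bytes instead of 4, so in this particular encoding the sparse payload is only smaller than the dense tensor above 50% sparsity.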

Our system addresses key challenges in edge AI deployment, including high latency, heavy bandwidth usage, and limited scalability, making it suitable for resource-constrained environments such as IoT networks and mobile device clusters.

Project Aim

This research aims to develop and evaluate a novel adaptive sparsification algorithm that reduces communication overhead in MDI while dynamically adapting to varying network conditions. Additionally, the research seeks to design and implement a peer-to-peer (P2P) MDI framework that integrates the proposed algorithm, enabling real-world evaluation of its effectiveness.
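One simple way a network-aware policy could pick a sparsity level is sketched below: smooth bandwidth measurements with an exponentially weighted moving average (EWMA), then interpolate linearly so that lower bandwidth yields higher sparsity. The class name, the bandwidth thresholds, the smoothing weight, and the 0.8 ceiling are all illustrative assumptions; this is not the project's actual adaptive algorithm.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AdaptiveSparsityController:
    """Hypothetical controller: lower measured bandwidth -> higher sparsity.

    All thresholds are illustrative assumptions, not values from Sparse-MDI.
    """
    low_mbps: float = 5.0      # at or below this, use max_sparsity
    high_mbps: float = 50.0    # at or above this, use min_sparsity
    min_sparsity: float = 0.0
    max_sparsity: float = 0.8
    alpha: float = 0.3         # EWMA weight given to the newest sample
    _estimate: Optional[float] = field(default=None, init=False, repr=False)

    def observe(self, mbps: float) -> float:
        """Fold a new bandwidth measurement into the smoothed estimate."""
        if self._estimate is None:
            self._estimate = mbps
        else:
            self._estimate = self.alpha * mbps + (1 - self.alpha) * self._estimate
        return self._estimate

    def sparsity(self) -> float:
        """Map the smoothed bandwidth linearly onto [min_sparsity, max_sparsity]."""
        if self._estimate is None:
            return self.max_sparsity  # no measurements yet: be conservative
        t = (self.high_mbps - self._estimate) / (self.high_mbps - self.low_mbps)
        t = min(1.0, max(0.0, t))     # clamp: 0 on a fast link, 1 on a slow one
        return self.min_sparsity + t * (self.max_sparsity - self.min_sparsity)


ctrl = AdaptiveSparsityController()
ctrl.observe(50.0)          # fast link -> no sparsification
ctrl.observe(5.0)           # sudden drop; EWMA moves the estimate to 36.5 Mbit/s
print(ctrl.sparsity())      # roughly 0.24
```

The EWMA keeps a single slow sample from swinging the sparsity level abruptly; a smaller `alpha` reacts more slowly but more stably.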

Distributed Inference

Enables AI inference directly across distributed edge devices, reducing cloud dependency and latency.
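Distributed inference of this kind typically splits a model into contiguous partitions, runs each partition on a different node, and passes the intermediate activation from one node to the next. The sketch below assumes a naive equal-layer-count split and uses toy integer "layers"; the function names are hypothetical, and a real partitioner would balance compute cost and the size of the tensor crossing each cut.

```python
from typing import Any, Callable, List, Sequence

Layer = Callable[[Any], Any]


def partition_layers(layers: Sequence[Layer], num_nodes: int) -> List[List[Layer]]:
    """Split layers into num_nodes contiguous stages of near-equal length."""
    if not 1 <= num_nodes <= len(layers):
        raise ValueError("need 1 <= num_nodes <= number of layers")
    base, extra = divmod(len(layers), num_nodes)
    stages, start = [], 0
    for i in range(num_nodes):
        # The first `extra` stages each take one additional layer
        end = start + base + (1 if i < extra else 0)
        stages.append(list(layers[start:end]))
        start = end
    return stages


def run_stage(stage: Sequence[Layer], x: Any) -> Any:
    """Apply one node's layers in order (the work done locally on that node)."""
    for layer in stage:
        x = layer(x)
    return x


def run_pipeline(stages: Sequence[Sequence[Layer]], x: Any) -> Any:
    """Run stages in order. The value handed from one stage to the next is the
    intermediate activation a real node would send over the network."""
    for stage in stages:
        x = run_stage(stage, x)
    return x


# Toy "model": seven integer layers standing in for CNN blocks
layers = [lambda x, k=k: 2 * x + k for k in range(7)]
stages = partition_layers(layers, num_nodes=3)
print([len(s) for s in stages])  # [3, 2, 2]
```

Partitioning does not change the result, only where each layer runs; the values handed between stages are what sparsification would shrink.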

Optimized Communication

Reduces bandwidth requirements through adaptive sparsification techniques.

Improved Latency

Decreases response times for real-time applications through distributed processing.

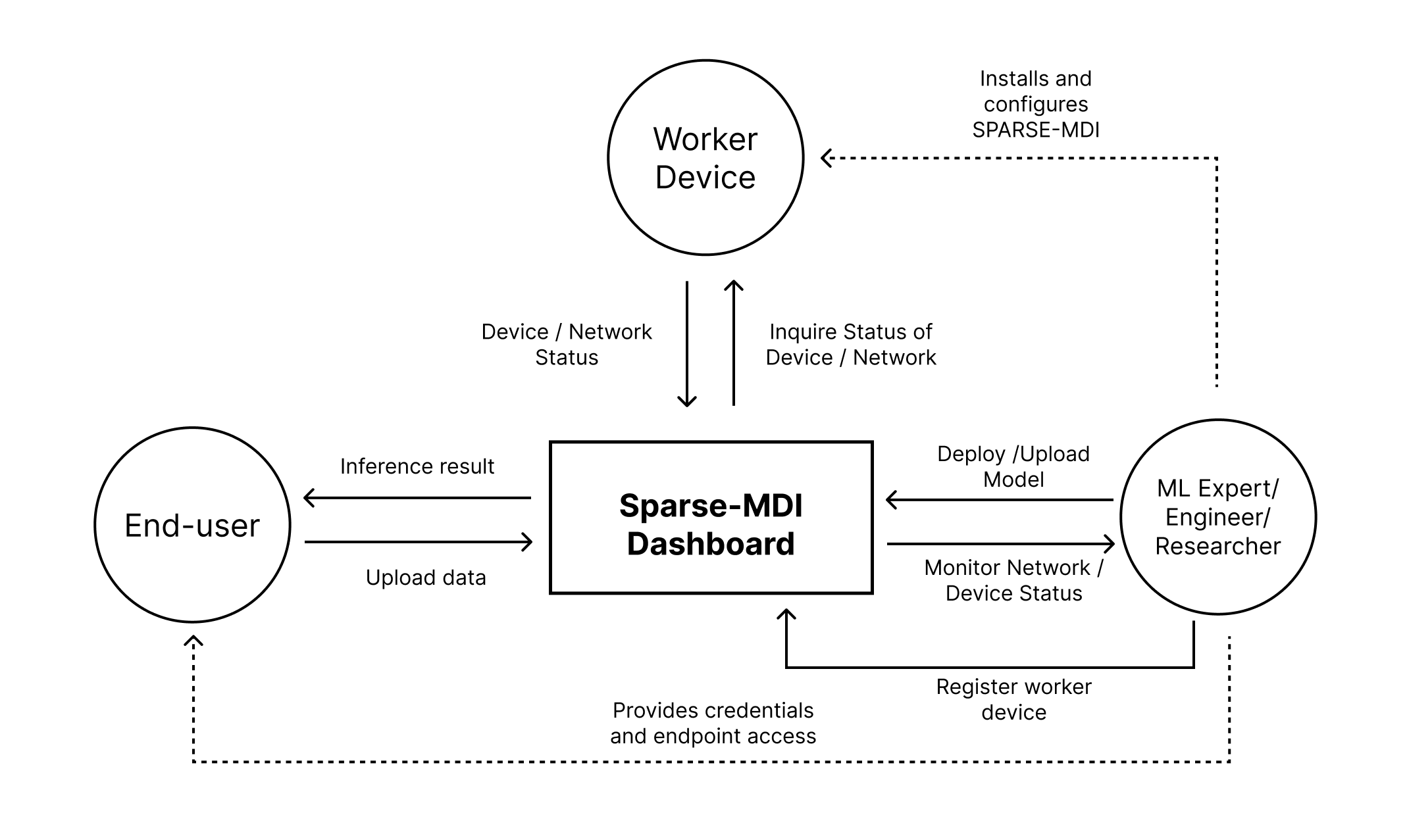

GUI for Deployments

Sparse-MDI comes with an interactive dashboard for deploying node configurations.

Design Overview

This section presents the design and architectural foundation of the Sparse-MDI framework, with a focus on its design goals, system architecture, and adaptive sparsification algorithm.

Design Goals

Key objectives guiding the development of the Sparse-MDI framework

| Design Goal | Description |
|---|---|
| Ease of Use | The system should be intuitive, with a minimal learning curve for end users. |
| Maintainability | The system must be maintainable and extensible with new features, following established coding standards. |
| Performance | The framework must maintain consistent model performance while minimizing the impact of unstable network conditions. The dashboard must also be responsive for a smooth user experience. |
| Reusability | Components must be modular, so they can be easily replaced for experimentation or improvement. |
| Scalability | Sparse-MDI must scale to any practical number of nodes and handle models of moderate complexity, provided the nodes' combined resources meet the model's requirements. |

Framework Evaluation

A comprehensive overview of the Sparse-MDI framework testing results, highlighting the effectiveness of adaptive sparsification for distributed model inference.

Testing Overview

Communication Gain Across Models

Percentage reduction in communication overhead at different sparsity levels

ResNet-50 with 2-node configuration achieved 51.22% communication gain at 0.8 sparsity level, while MobileNet-V2 in 3-node configuration reached 46.61% gain.
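As a sketch of how a percentage communication gain can be computed, the function below assumes gain is measured in bytes relative to a dense (unsparsified) baseline, as 100 × (1 − sent / dense). Both the function name and the byte-based definition are assumptions; overhead could also be measured as transfer time.

```python
def communication_gain(dense_bytes: int, sent_bytes: int) -> float:
    """Percentage reduction in bytes sent relative to the dense baseline.

    Assumed definition: 100 * (1 - sent / dense). The result is negative
    when more bytes are sent than in the dense baseline.
    """
    if dense_bytes <= 0:
        raise ValueError("dense_bytes must be positive")
    return 100.0 * (1.0 - sent_bytes / dense_bytes)


print(communication_gain(1000, 500))   # 50.0
print(communication_gain(1000, 1002))  # about -0.2: slightly more traffic than dense
```

Under this definition, a 51.22% gain would mean roughly 48.78% of the baseline traffic was still sent.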

Key Communication Findings

Summary of communication overhead reduction results

| Model Configuration | Max Communication Gain | Optimal Sparsity Level | Notes |
|---|---|---|---|
| ResNet-50 (2-node) | 51.22% | 0.8 | Consistent gains across sparsity levels |
| ResNet-50 (3-node) | 11.95% | 0.8 | Lower gains due to partitioning strategy |
| MobileNet-V2 (2-node) | -0.21% | N/A | Anomalous behavior with low-dimensional tensors |
| MobileNet-V2 (3-node) | 46.61% | 0.8 | Substantial gains with minor accuracy impact |

Testing Limitations

Constraints and considerations for interpreting test results

Test Environment

Testing was conducted on consumer hardware (Apple M2 with 8 GB RAM), using Docker to emulate a distributed system; this setup may not fully represent real-world edge deployments.

Scope Constraints

Due to time and resource limitations, certain scenarios were deprioritized or omitted, including comprehensive Sparse vs. Adaptive analysis and deployment on actual edge devices.

Network Simulation

Network conditions were simulated rather than tested in real-world environments, which may impact the applicability of findings to actual deployment scenarios.

Further testing in real-world edge deployments is recommended to validate the framework's performance under authentic conditions.

Limitations & Future Directions

An assessment of current constraints and potential avenues for extending the Sparse-MDI framework and adaptive sparsification research.

Research Context

Framework Limitations

Current constraints of the Sparse-MDI implementation

Research Contributions

Current and potential contributions to the body of knowledge

Research Domain

The novel adaptive sparsity algorithm is a key contribution to the field of distributed AI inference, demonstrating how dynamic sparsification can balance communication efficiency and model accuracy under varying network conditions.

Technical Domain

The Sparse-MDI framework and dashboard provide practical tools for implementing and monitoring adaptive sparsification in distributed inference scenarios, offering a foundation for further research and development.

Supporting Resources

Pre-partitioned model repositories, dataset generation scripts, and CNN partitioning utilities offer valuable resources for researchers in the field, facilitating reproducibility and extension of this work.

By addressing the identified limitations and pursuing the outlined future directions, the impact and utility of these contributions can be significantly expanded.

Documentation

Access comprehensive documentation about Sparse-MDI

Contact Us

Get in touch with our research team or explore collaboration opportunities


Contact Information

Ways to reach us

Email

dovh.me@gmail.com

Phone

+94 70 277 6085

Location

Faculty of Computing

Informatics Institute of Technology

57 Ramakrishna Rd,

Colombo 00600, Sri Lanka